Page updated: March 22, 2021

Author: Curtis Mobley

Autocovariance Functions: Theory

The preceding pages have described the statistical properties of random sea surfaces using variance spectra. This page begins the exploration of an alternate path to the specification of surface roughness properties. Clearly, wind-dependent variance spectra are applicable only to surface waves that are generated by wind. Consider, however, the surface of a flowing river. The river’s surface can have ripples or waves generated by turbulence resulting from unstable shear flow induced by flow over a shallow bottom, or by eddies created as the water flows around rocks in the river. These water surfaces do not depend on the wind speed and can have different statistical properties, hence different optical properties, than wind-roughened water surfaces. Such surfaces can be described by their autocovariance functions.

Autocovariance functions can be converted to elevation variance spectra via the Wiener-Khinchin theorem. This page shows how that is done. Once a given autocovariance function has been converted to its equivalent elevation variance spectrum, the algorithms of the previous chapters are immediately applicable, even though the variance spectrum is not wind-dependent. Indeed, this conversion enables the Fourier transform methods of the previous chapters to be used to generate random realizations of any surface, not just water surfaces.

As is often the case, there is a large gap between textbook theory (usually developed for continuous variables or an infinite sample size of discrete values) and its implementation in a computer program for a finite sample size of discrete variables. In particular, careful attention must be paid to sampling of an autocovariance function in order to obtain the corresponding variance spectrum, or vice versa. I find it disappointing and frustrating (but not surprising) that numerical matters such as the effects of finite sample size, maximum lag size, and exactly how to sample spectra or autocovariances (in particular, the material on the page Autocovariance Functions: Sampling) never seem to be mentioned in textbooks on digital signal processing or related subjects. It is left to the innocent student to spend a few weeks of unfunded time figuring out why various numerical results are not internally consistent or do not perfectly match the textbook theory.

This page begins with a discussion of autocovariances and then states the Wiener-Khinchin theorem. The theorem is illustrated numerically on the next page, first using the wind-dependent Pierson-Moskowitz elevation variance spectrum, for which certain values can be calculated analytically and used to check the numerical results. The modeling of water surfaces generated by shear-induced turbulence is then illustrated, again using analytical functions that allow for a rigorous check on the numerical results.

Autocovariance

The autocovariance of $z(x)$ is defined as

$$R(\xi) = \mathcal{E}\{z(x)\, z(x+\xi)\}, \tag{1}$$

where $\mathcal{E}\{\cdot\}$ denotes statistical expectation and $\xi$ is the spatial lag. This definition in terms of the expectation holds for both continuous and discrete variables. For the present discussion with $z$ being sea surface elevation, $R(\xi)$ shows how strongly the sea surface elevation at one location $x$ is correlated to the elevation at a distance $\xi$ away. $R$ has units of $\text{m}^2$, and $R(0) = \sigma^2$ is the variance of the surface elevation. The autocovariance is an even function of the lag: $R(-\xi) = R(\xi)$.

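Consider an infinite sample of discrete zero-mean surface elevations $z_n = z(n\,\Delta x)$ spaced a distance $\Delta x$ apart. The autocovariance is then computed by (e.g., Eq. 2.6.3 of Proakis and Manolakis (1996) with a minor change in their notation)

$$R(\ell\,\Delta x) = \sum_{n=-\infty}^{\infty} z_n\, z_{n+\ell}.$$

Here $\ell$ indexes the lag distance in units of the sample spacing $\Delta x$. For a finite sample of $N$ discrete values $z_0, z_1, \ldots, z_{N-1}$, the same authors define the sample autocovariance by (their Eq. 2.6.11)

$$R(\ell\,\Delta x) = \sum_{n=0}^{N-\ell-1} z_n\, z_{n+\ell}, \qquad \ell = 0, 1, \ldots, N-1. \tag{2}$$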

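As usual, there are competing definitions. For a finite sample of discrete values, perhaps with a non-zero mean $\bar z$, the IDL autocorrelation function (A_CORRELATE) uses

$$R(\ell\,\Delta x) = \frac{1}{N}\sum_{n=0}^{N-\ell-1} (z_n - \bar z)(z_{n+\ell} - \bar z), \tag{3}$$

where

$$\bar z = \frac{1}{N}\sum_{n=0}^{N-1} z_n$$

is the sample mean. Matlab computes the autocovariance via

Note that the lag must be less than the length of the sample. (That is, the sample locations are at $x_n = n\,\Delta x$, $n = 0, 1, \ldots, N-1$, and the allowed lag distances are $\ell\,\Delta x$ with $\ell = 0, 1, \ldots, N-1$.) Note also the factor of $1/N$ in the IDL definition (3), which does not appear in Eq. (2) and which takes a different form in the Matlab version.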
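The two finite-sample definitions can be compared directly. The following is a minimal NumPy sketch of Eqs. (2) and (3) exactly as written above; the function names `autocov_eq2` and `autocov_eq3` are hypothetical, and the code does not reproduce any IDL or Matlab internals. For a zero-mean sample the two results differ only by the factor $1/N$, and both refuse lags of $N$ or more.

```python
import numpy as np

def autocov_eq2(z, lag):
    """Eq. (2): raw sum of lagged products; assumes z already has zero mean."""
    z = np.asarray(z, dtype=float)
    n = z.size
    if not 0 <= lag < n:
        raise ValueError("lag must satisfy 0 <= lag < N")
    return float(np.dot(z[: n - lag], z[lag:]))

def autocov_eq3(z, lag):
    """Eq. (3): remove the sample mean, sum lagged products, divide by N."""
    z = np.asarray(z, dtype=float)
    n = z.size
    if not 0 <= lag < n:
        raise ValueError("lag must satisfy 0 <= lag < N")
    d = z - z.mean()
    return float(np.dot(d[: n - lag], d[lag:])) / n

# A short zero-mean sample: here the estimators differ only by the 1/N factor.
z = np.array([1.0, -1.0, 1.0, -1.0])
print(autocov_eq2(z, 1), autocov_eq3(z, 1))  # -3.0 -0.75
```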

Nor is there even any consensus on the terms “autocovariance” and “autocorrelation.” Some authors (and this page) define the nondimensional autocorrelation as the autocovariance normalized by the variance, i.e.

$$\rho(\xi) = \frac{R(\xi)}{R(0)} = \frac{R(\xi)}{\sigma^2}. \tag{4}$$

However, Proakis and Manolakis (1996) call the autocovariance as used here the autocorrelation, and they call the autocorrelation of Eq. (4) the “normalized autocorrelation.” These sorts of differences in the definitions and computations of autocovariances can cause much grief when comparing the numerical outputs of different computer codes, or numerical outputs with textbook examples.
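Because Eq. (4) divides by the zero-lag value, any normalization factor that is the same for every lag (such as the $1/N$ of Eq. 3) cancels out of the autocorrelation, whereas a lag-dependent factor such as $1/(N-\ell)$ would not. A minimal NumPy sketch, with hypothetical helper names and a synthetic random sample standing in for measured elevations:

```python
import numpy as np

def lagged_sums(z, max_lag):
    """Sums of lagged products of the mean-removed sample, lags 0..max_lag."""
    z = np.asarray(z, dtype=float)
    n = z.size
    if not 0 <= max_lag < n:
        raise ValueError("max_lag must satisfy 0 <= max_lag < N")
    d = z - z.mean()
    return np.array([np.dot(d[: n - lag], d[lag:]) for lag in range(max_lag + 1)])

def autocorrelation(r):
    """Eq. (4): divide an autocovariance sequence by its zero-lag value."""
    r = np.asarray(r, dtype=float)
    return r / r[0]

rng = np.random.default_rng(0)
z = rng.standard_normal(256)              # synthetic stand-in for elevations

r_raw = lagged_sums(z, 10)                # unnormalized sums, Eq. (2) style
r_over_n = lagged_sums(z, 10) / z.size    # 1/N normalization, Eq. (3) style

# The constant 1/N cancels in Eq. (4).
print(np.allclose(autocorrelation(r_raw), autocorrelation(r_over_n)))  # True
```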

The Wiener-Khinchin Theorem

Now that the autocovariance has been defined, the Wiener-Khinchin theorem can be stated: The Fourier transform of the autocovariance equals the variance spectral density function. Symbolically,

$$S(\nu) = \mathcal{F}_\nu\{R(\xi)\} = \int_{-\infty}^{\infty} R(\xi)\, e^{-i2\pi\nu\xi}\, d\xi. \tag{5}$$

Indeed, some texts define the spectral density as the Fourier transform of the autocovariance. The inverse is of course

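$$R(\xi) = \mathcal{F}_\nu^{-1}\{S(\nu)\} = \int_{-\infty}^{\infty} S(\nu)\, e^{i2\pi\nu\xi}\, d\nu. \tag{6}$$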

Here $S(\nu)$ is a two-sided spectral density function as discussed on several previous pages (e.g., Surfaces to Spectra: 1D).
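As a numerical sanity check on Eqs. (5) and (6), the sketch below evaluates Eq. (5) by quadrature for a Gaussian autocovariance $R(\xi) = \sigma^2 e^{-\xi^2/(2s^2)}$. This is a hypothetical test case, chosen only because its transform, $\sigma^2 s\sqrt{2\pi}\,e^{-2\pi^2 s^2\nu^2}$, is known in closed form. The sketch then integrates the spectrum over $\nu$ to recover $R(0) = \sigma^2$, as Eq. (6) requires at zero lag. It assumes NumPy; the values of $\sigma^2$ and $s$ are illustrative.

```python
import numpy as np

def trapezoid(y, dx):
    """Trapezoid rule on a uniform grid."""
    return dx * (np.sum(y) - 0.5 * (y[0] + y[-1]))

sigma2 = 2.0e-4   # elevation variance [m^2] (illustrative)
s = 0.5           # Gaussian correlation scale [m] (illustrative)

xi = np.linspace(-10.0, 10.0, 4001)   # lags [m]; R(xi) is negligible at the ends
dxi = xi[1] - xi[0]
R = sigma2 * np.exp(-xi**2 / (2 * s**2))

def S_numeric(nu):
    """Eq. (5) by quadrature: integral of R(xi) exp(-i 2 pi nu xi) over xi."""
    return trapezoid(R * np.exp(-2j * np.pi * nu * xi), dxi)

def S_exact(nu):
    """Closed-form nu-transform of the Gaussian autocovariance."""
    return sigma2 * s * np.sqrt(2 * np.pi) * np.exp(-2 * np.pi**2 * s**2 * nu**2)

nus = np.array([0.0, 0.25, 0.5, 1.0])
S_num = np.array([S_numeric(v) for v in nus])

# Eq. (6) at zero lag: the integral of S(nu) over all nu returns R(0) = sigma2.
nu_grid = np.linspace(-5.0, 5.0, 2001)
R0 = trapezoid(S_exact(nu_grid), nu_grid[1] - nu_grid[0])

print(S_num.real, S_exact(nus), R0)
```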

It is important to note (as emphasized by the subscript $\nu$ on the Fourier transform operator $\mathcal{F}_\nu$) that the theorem is written with the $\nu$ version of the Fourier transform (Eq. 1 of the Fourier Transforms page), and the density function is written in terms of the spatial frequency $\nu$, which has units of 1/meters. (In the time domain, the conjugate variables are time $t$ in seconds and frequency $f$ in 1/seconds = cycles/second = Hz.) The spectral density function for the angular spatial frequency $k = 2\pi\nu$ in radians per meter (or angular temporal frequency $\omega = 2\pi f$ in radians per second in the time domain) can be obtained by noting that corresponding intervals of the spectral densities contain the same amount of variance:

$$S(k)\, dk = S(\nu)\, d\nu,$$

which gives

$$S(k) = \frac{1}{2\pi}\, S\!\left(\nu = \frac{k}{2\pi}\right). \tag{7}$$

Note that $\xi$ varies from $-\infty$ to $\infty$ and, likewise, $\nu$ or $k$ ranges over all negative and positive values. The variance spectrum obtained from the Fourier transform of an autocovariance function is therefore a two-sided spectrum.
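Eq. (7) can be checked by confirming that $S(\nu)$ and $S(k)$ hold the same total variance. The sketch below assumes NumPy and uses a hypothetical Gaussian test spectrum (the $\nu$-transform of $\sigma^2 e^{-\xi^2/(2s^2)}$, with illustrative $\sigma^2$ and $s$). It also shows that evaluating $S(\nu)$ at $\nu = k/(2\pi)$ without the $1/(2\pi)$ factor inflates the variance by exactly $2\pi$.

```python
import numpy as np

def S_nu(nu, sigma2=2.0e-4, s=0.5):
    """Hypothetical two-sided Gaussian test spectrum in nu [1/m], units m^3:
    the nu-transform of sigma2 * exp(-xi^2 / (2 s^2))."""
    return sigma2 * s * np.sqrt(2 * np.pi) * np.exp(-2 * np.pi**2 * s**2 * nu**2)

def S_k(k, **params):
    """Eq. (7): density per unit angular spatial frequency k = 2*pi*nu [rad/m]."""
    return S_nu(k / (2 * np.pi), **params) / (2 * np.pi)

def integrate(y, dx):
    """Trapezoid rule on a uniform grid."""
    return dx * (np.sum(y) - 0.5 * (y[0] + y[-1]))

nu = np.linspace(-5.0, 5.0, 2001)
k = 2 * np.pi * nu
dnu, dk = nu[1] - nu[0], k[1] - k[0]

var_nu = integrate(S_nu(nu), dnu)                 # variance from S(nu)
var_k = integrate(S_k(k), dk)                     # same variance from S(k)
var_naive = integrate(S_nu(k / (2 * np.pi)), dk)  # 1/(2 pi) omitted

print(var_nu, var_k, var_naive / var_nu)
```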

Comment: In light of Eq. (7), the theorem stated in terms of angular spatial frequency appears to be

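$$S(k) = \frac{1}{2\pi}\,\mathcal{F}_k\{R(\xi)\} = \frac{1}{2\pi}\int_{-\infty}^{\infty} R(\xi)\, e^{-ik\xi}\, d\xi, \tag{8}$$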

with the inverse

$$R(\xi) = \mathcal{F}_k^{-1}\{2\pi\, S(k)\} = \int_{-\infty}^{\infty} S(k)\, e^{ik\xi}\, dk. \tag{9}$$

I say “appears to be” because I’ve never actually seen the theorem written this way; the textbooks all seem to stick with $\xi$ and $\nu$ (or $t$ and $f$ in the time domain). As Press et al. (1992) say in Numerical Recipes (p. 491), “There are fewer factors of $2\pi$ to remember if you use the ($f$ or $\nu$) convention, especially when we get to discretely sampled data....” In any case, Eqs. (8) and (9) are consistent with the spectrum of Eq. (17) discussed below.

The theorem is usually proved in textbooks for continuous variables $\xi$ and $\nu$. However, in numerical application to a finite number of discrete samples, discrete variables $\xi_\ell = \ell\,\Delta x$ and $\nu_j$ or $k_j$ are used, and proper attention must be paid to pesky factors of $2\pi$, $N$, and bandwidth, and to the array ordering required by a particular FFT routine.

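The continuous-variable Fourier transform in Eq. (5) gives a spectral density with units of $\text{m}^2/(1/\text{m}) = \text{m}^3$. However, if the theorem is written for a DFT of discrete data $R_\ell = R(\ell\,\Delta x)$,

$$\hat S_j = \frac{1}{N}\sum_{\ell=0}^{N-1} R_\ell\, e^{-i2\pi j\ell/N}, \qquad j = 0, 1, \ldots, N-1, \tag{10}$$

then the resulting discrete spectrum $\hat S_j$ has units of $\text{m}^2$. Just as was discussed on the Fourier Transforms page, this discrete spectrum must be divided by the bandwidth $\Delta\nu = 1/(N\,\Delta x)$ in order to obtain the density at $\nu_j$. That is,

$$S(\nu_j) = \frac{\hat S_j}{\Delta\nu}. \tag{11}$$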
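The discrete route of Eqs. (10) and (11) can be sketched with NumPy's FFT under two stated assumptions. First, NumPy's forward `numpy.fft.fft` applies no $1/N$ factor, so its output is divided by $N$ explicitly to match Eq. (10). Second, the lags are put into NumPy's wraparound order (lag 0 first, negative lags at the end) with `numpy.fft.ifftshift`. The Gaussian autocovariance and its parameter values are illustrative; its closed-form spectrum serves as the check.

```python
import numpy as np

sigma2 = 2.0e-4   # elevation variance [m^2] (illustrative)
s = 0.5           # Gaussian correlation scale [m] (illustrative)
N = 1024          # number of lag samples (even)
dx = 0.05         # lag spacing Delta x [m]

# Sample R at lags -N/2 ... N/2-1, then reorder so that lag 0 is element 0
# and negative lags wrap to the end: the ordering numpy.fft.fft expects.
ell = np.arange(-N // 2, N // 2)
R = sigma2 * np.exp(-(ell * dx) ** 2 / (2 * s**2))
R_wrapped = np.fft.ifftshift(R)

# Eq. (10): numpy's forward FFT carries no 1/N factor, so divide by N here.
S_hat = np.fft.fft(R_wrapped) / N      # discrete spectrum [m^2]
dnu = 1.0 / (N * dx)                   # bandwidth Delta nu [1/m]
nu = np.fft.fftfreq(N, d=dx)           # nu_j in the same (wrapped) order

# Eq. (11): divide by the bandwidth to obtain a spectral density [m^3].
S_density = S_hat.real / dnu

# Compare with the closed-form nu-transform of the Gaussian autocovariance.
S_exact = sigma2 * s * np.sqrt(2 * np.pi) * np.exp(-2 * np.pi**2 * s**2 * nu**2)
rel_err = np.max(np.abs(S_density - S_exact)) / S_exact.max()

print(rel_err, dnu * S_density.sum() / sigma2)
```

The final printed ratio reflects the discrete analog of integrating the density over frequency: summing $S(\nu_j)\,\Delta\nu$ over all $j$ returns $R(0)$.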

Example

Horoshenkov et al. (2013) (their Eq. 4) give an analytic formula for the autocorrelation function of surface waves generated by bottom-induced turbulence in shallow flowing water. In the notation of this page, this function is

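$$\rho(\xi) = \exp\!\left(-\frac{\xi^2}{2\sigma_w^2}\right)\cos\!\left(\frac{2\pi\xi}{L_0}\right). \tag{12}$$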

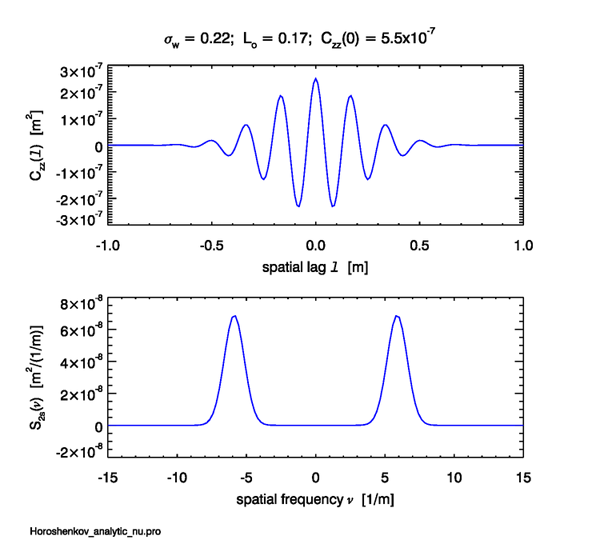

In their words, “$\sigma_w$ relates to the spatial radius of correlation (correlation length)” and “$L_0$ relates to the characteristic period in the surface wave pattern.” The average values for the physical conditions of the Horoshenkov et al. study are and . [Note: Eq. (12) is Horoshenkov’s as shown in their abstract and in their conclusions, where it has a factor of 1/2 in the exponential. Their Eq. (4) does not have the 1/2. This is probably a typo, since Gaussians usually have the form $e^{-x^2/(2\sigma^2)}$.]

This autocorrelation function provides a nice example of how to use the Wiener-Khinchin theorem to obtain the corresponding variance spectrum. Equation (12), when converted to an autocovariance by multiplying by the variance $\sigma^2$, has the form

$$R(\xi) = \sigma^2 e^{-a\xi^2}\cos(b\xi), \tag{13}$$

where $a = 1/(2\sigma_w^2)$ and $b = 2\pi/L_0$. This function has an easily computed analytical Fourier transform.

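The continuous-variable Fourier transform of this is given by Eq. (1) of the Fourier Transforms page:

$$S(\nu) = \mathcal{F}_\nu\{R(\xi)\} = \int_{-\infty}^{\infty} \sigma^2 e^{-a\xi^2}\cos(b\xi)\, e^{-i2\pi\nu\xi}\, d\xi. \tag{14}$$

Here $\xi$ and $\nu$ are continuous variables; $S(\nu)$ has units of $\text{m}^3$, which is interpreted as $\text{m}^2/(1/\text{m})$ as explained before. Note that this variance spectral density is two-sided, i.e., $-\infty < \nu < \infty$. Expanding the complex exponential via $e^{-i\theta} = \cos\theta - i\sin\theta$ gives

$$S(\nu) = \sigma^2\int_{-\infty}^{\infty} e^{-a\xi^2}\cos(b\xi)\cos(2\pi\nu\xi)\, d\xi \;-\; i\,\sigma^2\int_{-\infty}^{\infty} e^{-a\xi^2}\cos(b\xi)\sin(2\pi\nu\xi)\, d\xi.$$

The imaginary term is zero because the integrand is an odd function of $\xi$. Using the identity

$$\cos A\,\cos B = \tfrac{1}{2}\left[\cos(A-B) + \cos(A+B)\right]$$

gives

$$S(\nu) = \frac{\sigma^2}{2}\int_{-\infty}^{\infty} e^{-a\xi^2}\left\{\cos\left[(b-2\pi\nu)\xi\right] + \cos\left[(b+2\pi\nu)\xi\right]\right\} d\xi.$$

The integral

$$\int_{-\infty}^{\infty} e^{-a\xi^2}\cos(c\,\xi)\, d\xi = \sqrt{\frac{\pi}{a}}\; e^{-c^2/(4a)}$$

gives the Fourier transform of the $R(\xi)$ of Eq. (13):

$$S(\nu) = \frac{\sigma^2}{2}\sqrt{\frac{\pi}{a}}\left[e^{-(b-2\pi\nu)^2/(4a)} + e^{-(b+2\pi\nu)^2/(4a)}\right]. \tag{15}$$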

This variance spectrum has maxima at $\nu = \pm b/(2\pi) = \pm 1/L_0$, where the value is very close to $\frac{\sigma^2}{2}\sqrt{\pi/a}$. Figure 1 plots this $R(\xi)$ (Eq. 13) and $S(\nu)$ (Eq. 15) for typical values of $\sigma$, $\sigma_w$, and $L_0$. Note that the sub-peaks of the autocovariance lie near integer multiples of $L_0$, and that the peaks of the spectrum are at $\nu = \pm 1/L_0$.
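The derivation of Eq. (15) and the statements about its peaks can be checked numerically. This NumPy sketch uses illustrative parameters ($\sigma^2 = 10^{-4}\ \text{m}^2$, $\sigma_w = 0.1$ m, $L_0 = 0.2$ m), which are assumptions and not the values reported by Horoshenkov et al. It (1) evaluates Eq. (14) by brute-force quadrature and compares the result with Eq. (15), (2) locates the positive-frequency spectral peak, and (3) locates the first sub-peak of $R(\xi)$. For these parameters the spectral peak falls at $1/L_0 = 5\ \text{m}^{-1}$, while the first sub-peak of the autocovariance sits at about 0.18 m rather than exactly at $L_0$, because the Gaussian envelope pulls it toward zero lag.

```python
import numpy as np

# Illustrative parameters (assumed; not the values reported by Horoshenkov et al.)
sigma2 = 1.0e-4   # elevation variance sigma^2 [m^2]
sigma_w = 0.1     # correlation length sigma_w [m]
L0 = 0.2          # characteristic period L_0 [m]
a = 1.0 / (2.0 * sigma_w**2)
b = 2.0 * np.pi / L0

def R(xi):
    """Eq. (13): autocovariance sigma^2 exp(-a xi^2) cos(b xi)."""
    return sigma2 * np.exp(-a * xi**2) * np.cos(b * xi)

def S_nu(nu):
    """Eq. (15): analytic two-sided spectral density in nu [1/m]."""
    pref = 0.5 * sigma2 * np.sqrt(np.pi / a)
    return pref * (np.exp(-(b - 2 * np.pi * nu) ** 2 / (4 * a))
                   + np.exp(-(b + 2 * np.pi * nu) ** 2 / (4 * a)))

# 1. Eq. (14) by brute-force quadrature (Riemann sum on a fine, wide lag grid).
xi = np.linspace(-2.0, 2.0, 8001)
dxi = xi[1] - xi[0]
nus = np.linspace(-15.0, 15.0, 61)
S_quad = np.array([dxi * np.sum(R(xi) * np.exp(-2j * np.pi * v * xi)) for v in nus])
rel_err = np.max(np.abs(S_quad - S_nu(nus))) / S_nu(nus).max()

# 2. Location and height of the positive-frequency spectral peak.
nu = np.linspace(0.0, 20.0, 200001)
nu_peak = nu[np.argmax(S_nu(nu))]
peak_ratio = S_nu(nu_peak) / (0.5 * sigma2 * np.sqrt(np.pi / a))

# 3. First sub-peak of R(xi), searched for between L0/2 and 3*L0/2.
lag = np.linspace(0.5 * L0, 1.5 * L0, 100001)
xi_sub = lag[np.argmax(R(lag))]

print(rel_err, nu_peak, peak_ratio, xi_sub)
```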

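By definition, the integral over all frequencies of an elevation variance spectral density gives the total elevation variance $\sigma^2$:

$$\sigma^2 = \int_{-\infty}^{\infty} S(\nu)\, d\nu.$$

This can be computed analytically for the spectrum of Eq. (15). The spectrum of Eq. (15) is the sum of two identical Gaussians centered at different $\nu$ values. Consider the one centered at $\nu = b/(2\pi)$, which involves the exponential with the $(b - 2\pi\nu)$ term. The total variance is then twice the integral of this Gaussian:

$$\int_{-\infty}^{\infty} S(\nu)\, d\nu = 2\cdot\frac{\sigma^2}{2}\sqrt{\frac{\pi}{a}}\int_{-\infty}^{\infty} e^{-(b-2\pi\nu)^2/(4a)}\, d\nu.$$

Letting $u = 2\pi\nu - b$, so that $d\nu = du/(2\pi)$, gives

$$\int_{-\infty}^{\infty} S(\nu)\, d\nu = \frac{\sigma^2}{2\pi}\sqrt{\frac{\pi}{a}}\int_{-\infty}^{\infty} e^{-\alpha u^2}\, du,$$

where $\alpha = 1/(4a)$. The integral

$$\int_{-\infty}^{\infty} e^{-\alpha u^2}\, du = \sqrt{\frac{\pi}{\alpha}} = \sqrt{4\pi a}$$

then gives the final result:

$$\int_{-\infty}^{\infty} S(\nu)\, d\nu = \frac{\sigma^2}{2\pi}\sqrt{\frac{\pi}{a}}\,\sqrt{4\pi a} = \sigma^2. \tag{16}$$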

Thus, starting with a variance of $\sigma^2$ in the autocovariance of Eq. (13), obtaining the variance spectral density from the Fourier $\nu$-transform of the autocovariance, and then integrating the variance spectral density over $\nu$ gives back the variance as originally specified in the autocovariance function.
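A numerical counterpart of Eq. (16), assuming NumPy and illustrative parameter values that are not from Horoshenkov et al., sums Eq. (15) over a wide frequency grid and also evaluates the closed-form steps of the derivation ($\alpha = 1/(4a)$ and $\int e^{-\alpha u^2}\,du = \sqrt{\pi/\alpha}$):

```python
import numpy as np

# Illustrative values (assumed): variance [m^2], correlation length [m], period [m]
sigma2, sigma_w, L0 = 1.0e-4, 0.1, 0.2
a = 1.0 / (2.0 * sigma_w**2)
b = 2.0 * np.pi / L0

def S_nu(nu):
    """Eq. (15): two-sided spectral density in nu [1/m]."""
    pref = 0.5 * sigma2 * np.sqrt(np.pi / a)
    return pref * (np.exp(-(b - 2 * np.pi * nu) ** 2 / (4 * a))
                   + np.exp(-(b + 2 * np.pi * nu) ** 2 / (4 * a)))

# Numerical version of the integral in Eq. (16); both Gaussians fit in the grid.
nu = np.linspace(-30.0, 30.0, 60001)
variance_numeric = (nu[1] - nu[0]) * np.sum(S_nu(nu))

# Closed-form steps of the derivation: alpha = 1/(4a), Gaussian integral sqrt(pi/alpha).
alpha = 1.0 / (4.0 * a)
variance_closed = sigma2 / (2 * np.pi) * np.sqrt(np.pi / a) * np.sqrt(np.pi / alpha)

print(variance_numeric / sigma2, variance_closed / sigma2)
```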

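However, if the above process is naively carried through starting (as in Eq. 14) with the $k$-transform of Eq. (3) on the Fourier Transforms page, the end result (as in Eq. 16) is $2\pi\sigma^2$. This extra factor of $2\pi$ is rectified by the factor of $1/(2\pi)$ seen in Eq. (7). Thus the $k$ version of the Horoshenkov spectral density is

$$S(k) = \frac{\sigma^2}{4\pi}\sqrt{\frac{\pi}{a}}\left[e^{-(b-k)^2/(4a)} + e^{-(b+k)^2/(4a)}\right]. \tag{17}$$

Integration of this $S(k)$ over all $k$ then results in $\sigma^2$, as required. The inverse transform of the spectral density (17), $\int_{-\infty}^{\infty} S(k)\, e^{ik\xi}\, dk$, gives $R(\xi)$ of Eq. (13), as expected from Eq. (9).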
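Finally, a NumPy sketch with illustrative, assumed parameters (not the values of Horoshenkov et al.) checks the three claims about Eq. (17): it integrates to $\sigma^2$ over $k$; omitting the $1/(2\pi)$ of Eq. (7) gives $2\pi\sigma^2$ instead; and Eq. (9) recovers $R(\xi)$ of Eq. (13) at a few sample lags.

```python
import numpy as np

# Illustrative values (assumed): variance [m^2], correlation length [m], period [m]
sigma2, sigma_w, L0 = 1.0e-4, 0.1, 0.2
a = 1.0 / (2.0 * sigma_w**2)
b = 2.0 * np.pi / L0

def R(xi):
    """Eq. (13): autocovariance."""
    return sigma2 * np.exp(-a * xi**2) * np.cos(b * xi)

def S_k(k):
    """Eq. (17): two-sided density per unit angular spatial frequency k [rad/m]."""
    pref = sigma2 / (4 * np.pi) * np.sqrt(np.pi / a)
    return pref * (np.exp(-(b - k) ** 2 / (4 * a)) + np.exp(-(b + k) ** 2 / (4 * a)))

k = np.linspace(-200.0, 200.0, 40001)
dk = k[1] - k[0]

# Integral of Eq. (17) over all k: should return sigma^2.
variance_k = dk * np.sum(S_k(k))

# The naive k-transform (no 1/(2 pi)) equals 2*pi*S(k) and integrates to 2*pi*sigma^2.
variance_naive = dk * np.sum(2 * np.pi * S_k(k))

# Eq. (9): R(xi) = integral of S(k) exp(i k xi) dk, checked at a few lags.
lags = np.array([0.0, 0.05, 0.1, 0.2])
R_back = np.array([dk * np.sum(S_k(k) * np.exp(1j * k * x)) for x in lags])

print(variance_k / sigma2, variance_naive / (2 * np.pi * sigma2))
print(np.max(np.abs(R_back - R(lags))) / sigma2)
```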
